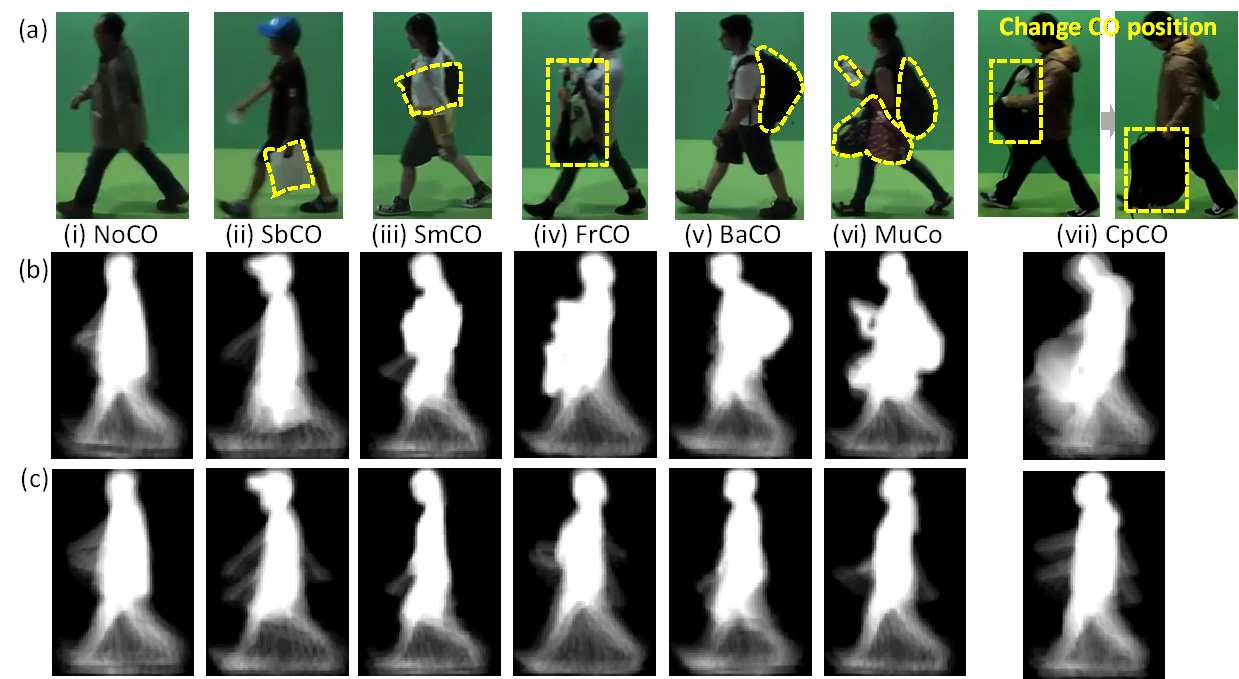

We present a very large dataset of 62,528 participants, together with performance evaluations for gait recognition under various practical conditions and for carrying-status recognition.

We propose a method for constructing gait patterns (with Self-DTW) and a method to improve the pattern quality by eliminating the ambiguity of temporal distortion.
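As a rough illustration of the dynamic time warping that Self-DTW takes its name from, the sketch below computes the classic DTW alignment cost between two 1-D sequences. It is not the Self-DTW method of the papers listed here; the function name `dtw_distance` and the absolute-difference local cost are assumptions made for this example.

```python
import math


def dtw_distance(a, b):
    """Classic DTW cost between two 1-D sequences (illustrative, not Self-DTW)."""
    n, m = len(a), len(b)
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])  # assumed local cost
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b repeats a sample
                cost[i][j - 1],      # b advances, a repeats a sample
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]
```

Two sequences that differ only by a locally stretched sample, such as `[0, 1, 2, 1, 0]` and `[0, 1, 1, 2, 1, 0]`, align at zero cost, which is the kind of temporal distortion that warping is meant to absorb.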

- Trung Thanh Ngo, Yasushi Makihara, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Yasushi Yagi,

Phase Registration in a Gallery Improving Gait Authentication,

Proc. of the International Joint Conference on Biometrics (IJCB2011), Washington D.C., USA, Oct. 2011.

- Yasushi Makihara, Md. Rasyid Aqmar, Trung Thanh Ngo, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Yasushi Yagi,

Phase Estimation of a Single Quasi-periodic Signal,

IEEE Transactions on Signal Processing 62(8): 2066-2079 (2014).

- Yasushi Makihara, Trung Thanh Ngo, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Yasushi Yagi,

Phase Registration of a Single Quasi-Periodic Signal Using Self Dynamic Time Warping,

In Proc. of the 10th Asian Conf. on Computer Vision, pp. 1965-1975, Queenstown, New Zealand, Nov. 2010.

We present the world's largest inertial gait dataset, with 744 participants, and its performance evaluations against a variety of factors, such as age, gender, and sensor.

- Trung Thanh Ngo, Yasushi Makihara, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Yasushi Yagi,

Performance Evaluation of Gait Recognition using the Largest Inertial Sensor-based Gait Database,

IAPR/IEEE International Conference on Biometrics (ICB2012), New Delhi, India, Apr. 2012.

- Trung Thanh Ngo, Yasushi Makihara, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Yasushi Yagi,

The Largest Inertial Sensor-based Gait Database and Performance Evaluation of Gait-based Personal Authentication,

Pattern Recognition 47 (2014), pp. 228-237.

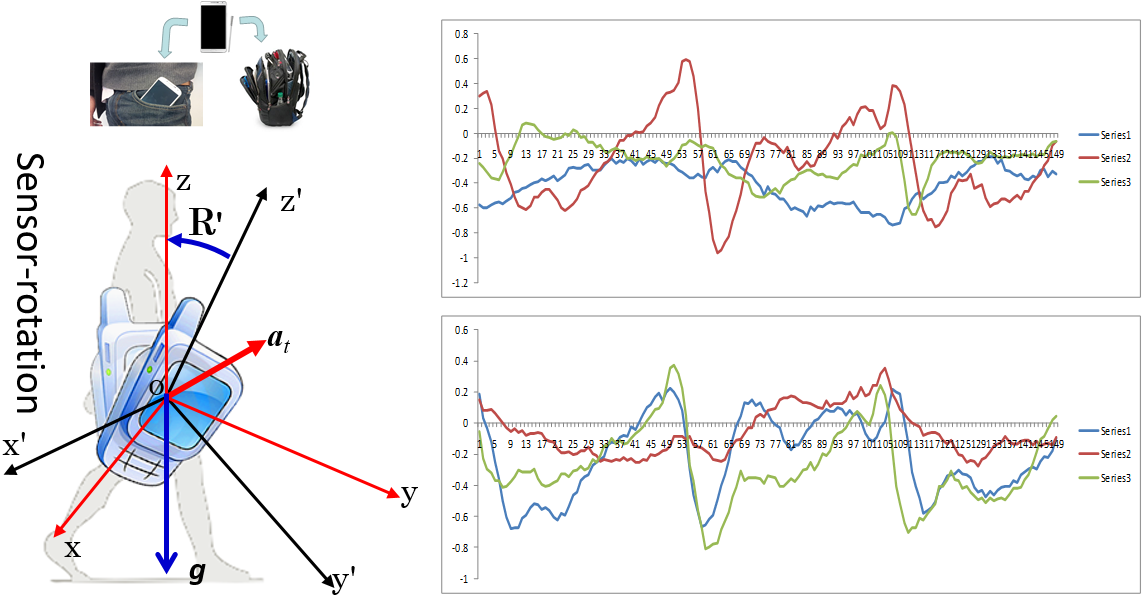

An actual gait signal is always subject to change due to many factors, including variation in sensor attachment. In this research, we tackle practical sensor-orientation inconsistency, in which signal sequences are captured at different sensor orientations. We present a method for registering gait signals from rotated sensors and its application to gait recognition.
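To make the idea of registering signals from rotated sensors concrete, the sketch below estimates a single rotation between two 3-axis signal sequences with the standard Kabsch (orthogonal Procrustes) solution. This is a generic technique, not the method of this research: it assumes the two sequences are already sample-aligned in time and differ only by a fixed rotation, and the name `estimate_rotation` is hypothetical.

```python
import numpy as np


def estimate_rotation(src, dst):
    """Least-squares rotation R with R @ src[i] ~= dst[i] (Kabsch algorithm).

    src, dst: arrays of shape (N, 3), assumed to be time-aligned samples.
    No centering is applied, since a pure rotation has no translation part.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    h = src.T @ dst                       # 3x3 cross-covariance matrix
    u, _, vt = np.linalg.svd(h)
    # Flip the last axis if needed so the result is a proper rotation (det = +1)
    d = 1.0 if np.linalg.det(vt.T @ u.T) >= 0 else -1.0
    return vt.T @ np.diag([1.0, 1.0, d]) @ u.T
```

Given the estimate `r`, a source sequence can be expressed in the target sensor frame with `src @ r.T`. Real gait signals would also need temporal alignment and noise handling, which this sketch leaves out.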

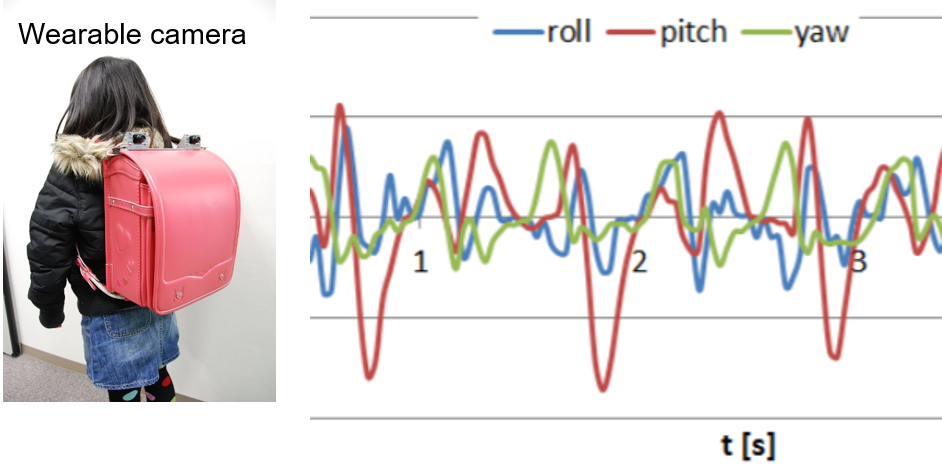

Inspired by sensor-based gait recognition, we present a solution for gait recognition with a wearable camera. We employ a camera motion estimation method and use the motion signal to recognize the person who is wearing the camera.

- Kohei Shiraga, Trung Thanh Ngo, Ikuhisa Mitsugami, Yasuhiro Mukaigawa, Yasushi Yagi,

Gait-based Person Authentication by Wearable Cameras,

The ninth International Conference on Networked Sensing Systems (INSS2012), June 2012.

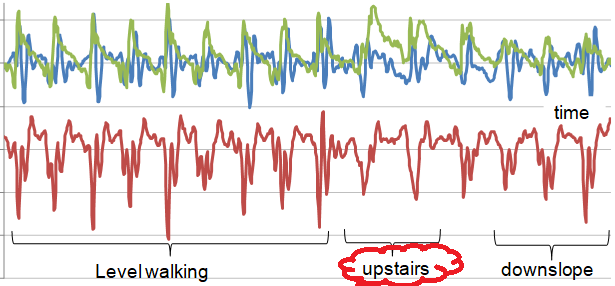

This research tackles the challenging problem of inertial sensor-based recognition of similar gait action classes (such as walking on flat ground, up/down stairs, and up/down a slope). We address three drawbacks of existing methods in the case of gait actions: action signal segmentation, sensor orientation inconsistency, and the recognition of similar action classes.

- Trung Thanh Ngo, Yasushi Makihara, Hajime Nagahara, Ryusuke Sagawa, Yasuhiro Mukaigawa, Yasushi Yagi,

Inertial-sensor-based Walking Action Recognition using Robust Step Detection and Inter-class Relationships,

Proc. 21st Int. Conf. on Pattern Recognition, Tsukuba, Japan, Nov. 2012.

- Trung Thanh Ngo, Yasushi Makihara, Hajime Nagahara, Yasuhiro Mukaigawa, Yasushi Yagi,

Similar gait action recognition using an inertial sensor,

Pattern Recognition 48(4): 1289-1301 (2015).

- Yasushi Makihara, Daigo Muramatsu, Haruyuki Iwama, Trung Thanh Ngo, Yasushi Yagi, Md. Altab Hossain,

Score-level fusion by generalized Delaunay triangulation,

IEEE/IAPR International Joint Conference on Biometrics (IJCB), Sept. 2014

- Thanh Trung Ngo, Md Atiqur Rahman Ahad, Anindya Das Antar, Masud Ahmed, Daigo Muramatsu, Yasushi Makihara, Yasushi Yagi, Sozo Inoue, Tahera Hossain, Yuichi Hattori,

OU-ISIR Wearable Sensor-based Gait Challenge: Age and Gender,

The 12th IAPR International Conference On Biometrics (ICB), Jun. 2019